Context: In the context of performance analysis, for example for a study of I/O response time, I want to analyze the flow of all wait events for 'db file sequential read', say for a group of sessions. What are the options?

- 10046 trace is the classic way. This provides all the details of the SQL execution including wait event details if I want to. One of the problem here is with the scalability of writing into trace files especially if I want to trace many and very active sessions, in addition possibly tracing for a long time. Moreover the amount of data in the traces is an overkill for what I need, as I am interested only in some particular aggregations of db file sequential read wait events.

- DTrace of Oracle sessions provides an elegant and direct way to tap into the wait event interface for real-time analysis (see this article for details). However this method is still niche and works best in Solaris, while I mostly work with Oracle on Linux.

- Sampling is a typical alternative to tracing. Oracle ASH does just that and is a great source of performance data. However Oracle ASH data is sampled at 1 Hz, too slow for capturing I/O events. (Note: ASH sampling rate can be increased by tweaking _ash_sampling_interval, although the highest available rate currently is 10 Hz, still too low for our purposes).

Sampling of wait event history data

Oracle's V$SESSION_WAIT_HISTORY provides details of the last 10 wait events for each session currently connected to the instance. The idea is to use this data as a series of ring buffers, indexed by Oracle's SIDs. How can we wrap around the buffers? It turns out that the underlying X$KSLWH table has a column that can be used just for that: KSLWHWAITID (the values in KSLWHWAITID are not reported in V$SESSION_WAIT_HISTORY unfortunately). Here below an example of some of the columns of interest in X$KSLWH:

| KSLWHSID | KSLWHWAITID | KSLWHETEXT | KSLWHETIME | KSLWHP1 | KSLWHP2 | KSLWHP3 |

|---|---|---|---|---|---|---|

| 42 | 21016 | db file sequential read | 300 | 25 | 148935 | 1 |

| 42 | 21015 | db file sequential read | 1020 | 25 | 47683 | 1 |

| 42 | 21014 | db file sequential read | 16024 | 25 | 124765 | 1 |

| 42 | 21013 | db file sequential read | 4100 | 25 | 57668 | 1 |

Example 1: fine-grained wait event latency histograms

Let's put our sampling technique at work for capturing latency data and then display the collected data with latency histograms. Note: latency histograms and their graphical representations are useful for studies of random read performance, see also this previous article on the subject.

The advantage of the method based on sampling X$KSLWH over the use of V$EVENT_HISTOGRAM are:

- The lowest bucket in V$EVENT_HISTOGRAM is 1 ms, which is too coarse for I/O coming from SSDs. By sampling we can reach to a finer scale (depending on the sampling rate, in practice ~100 micro seconds is easily reachable).

- We can apply a finer grain selection to the sessions we want to investigate, for example by limiting our sampling to one session or a group of sessions, as opposed to measuring the entire instance.

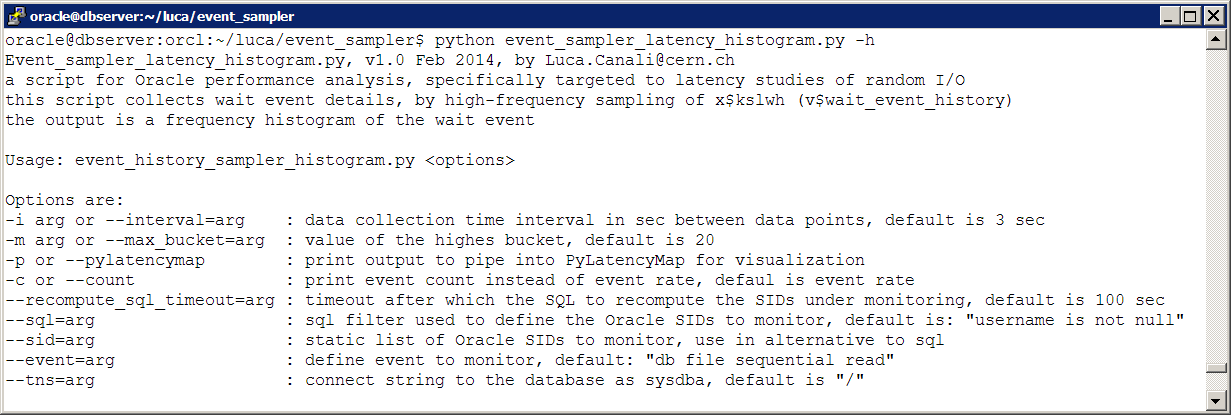

The tool: event_history_sampler_histogram.py is a python script that I wrote for this type of analysis. The script works by sampling X$KSLWH in a tight loop (which also mean it is CPU hungry). Here is a screenshot of the usage of this script with its options:

Here an example of the tool in actions with its output:

This script can be easily interfaced with PylatencyMap for heat map visualization. Here below is an example applied to displaying latency data of a workload generated with SLOB (note that the latency measurements are in microseconds):

$ python event_sampler_latency_histogram.py --sql="username like 'USER%'"|python ../PyLatencyMap/LatencyMap.py

Example 2: real-time analysis of all I/O instrumented by db file sequential read

The use case here is to analyze all the I/O performed via db file sequential read and perform real-time analysis of the data. This is similar to the case above but instead of limiting the analysis to the latency time we want also to make use of the information on which blocks have been read.

For example we can record all the blocks that have been read and instrumented using db file sequential read in addition to their latency details. Details of the block read are available as wait event parameters p1 and p2 (file number and block number respectively). We can collect the data using a technique similar to what was described above for the latency histograms example: that is by sampling X$KSLWH.

Some examples of what can we learn from this type analysis:

The use case here is to analyze all the I/O performed via db file sequential read and perform real-time analysis of the data. This is similar to the case above but instead of limiting the analysis to the latency time we want also to make use of the information on which blocks have been read.

For example we can record all the blocks that have been read and instrumented using db file sequential read in addition to their latency details. Details of the block read are available as wait event parameters p1 and p2 (file number and block number respectively). We can collect the data using a technique similar to what was described above for the latency histograms example: that is by sampling X$KSLWH.

Some examples of what can we learn from this type analysis:

- We can collect the list of all the blocks read in a given time interval. One of the simplest analysis is to count how may distinct blocks we have read. This gives an indication of how big the active area of the data set is for this workload.

- We can use the information on the size of the active data set as help for sizing the cache (for example for sizing the needs for SSD cache in the storage, or sizing the amount of RAM needed for efficiently buffer the workload).

- We can study physical IO based on latency, for example by measuring how much of the workload is reading 'new blocks' or instead reading multiple times the same set of data blocks.

- In the example below you can see that for each sampling interval (the default is a 10-second interval) we have info on:

- the number of distinct blocks sampled since the script has started collecting

- the number of blocks read during the latest collection interval having a latency <2 ms (presumably from SSD cache) and the drill-down details of whether they are new block reads or blocks that have been read before during sampling

- same as above but for blocks read with latency >=2 ms (presumably I/O coming from spindles)

- It's also straightforward to add custom analysis code in the python script to provide for different use case, if needs arise.

Limitations and additional comments

The main limitation of this method lies in the necessity to sample at high frequency, the higher the frequency the more events with low latency we can capture. In practice a frequency of 1 KHz seems good enough for the study of random reads. However this also means running the script in a 'tight loop' and consuming 1 core of CPU constantly during the execution of the script.

Moreover the execution time of the sampling query depends on the number of sessions monitored, therefore we will see lowering sampling rates as we increase the number of sampled sessions. In practice sampling up to a few hundred concurrent sessions at a time seems to give still good results.

Another limitation is due to the fact that we are only sampling db file sequential read waits and ignoring other wait events such as db file parallel read or in general multi-block and/or async IO events.

The script event_sampler_gather_IO_history.py works by collecting information on all blocks read during its execution. In the example above where information of 12M blocks was stored in a python dictionary, almost 2GB of RAM were used. The memory footprint of the script should be optimized in a future version, in the mean time memory consumption needs to be monitored when running this type of analysis.

Note for RAC: these scripts will only monitor activity in the instance where they are connecting to (basically it's like using V$ views as opposed to GV$).

The main limitation of this method lies in the necessity to sample at high frequency, the higher the frequency the more events with low latency we can capture. In practice a frequency of 1 KHz seems good enough for the study of random reads. However this also means running the script in a 'tight loop' and consuming 1 core of CPU constantly during the execution of the script.

Moreover the execution time of the sampling query depends on the number of sessions monitored, therefore we will see lowering sampling rates as we increase the number of sampled sessions. In practice sampling up to a few hundred concurrent sessions at a time seems to give still good results.

Another limitation is due to the fact that we are only sampling db file sequential read waits and ignoring other wait events such as db file parallel read or in general multi-block and/or async IO events.

The script event_sampler_gather_IO_history.py works by collecting information on all blocks read during its execution. In the example above where information of 12M blocks was stored in a python dictionary, almost 2GB of RAM were used. The memory footprint of the script should be optimized in a future version, in the mean time memory consumption needs to be monitored when running this type of analysis.

Note for RAC: these scripts will only monitor activity in the instance where they are connecting to (basically it's like using V$ views as opposed to GV$).

Download the scripts:

The scripts mentioned in this blog are available from http://cern.ch/canali/resources.htm

Feel free to download and try them out, however beware that they are still in a beta stage.

The scripts have been tested against Oracle 11.2.0.3, 11.2.04 and 12.1.0.1 on Linux RHEL5 and RHEL6 with Python 2.4 and 2.6 and cx_Oracle version 5.1.2.

Conclusions

This article describes the main ideas of a technique for performance analysis of Oracle wait event data based on sampling the wait event history data and in particular X$KSLWH to produce a wait event data stream for real-time analysis. This has been illustrated with two examples related to to the study of random read latency and to the study of random IO patterns. The scripts used for this analysis are written in python and available for free download.